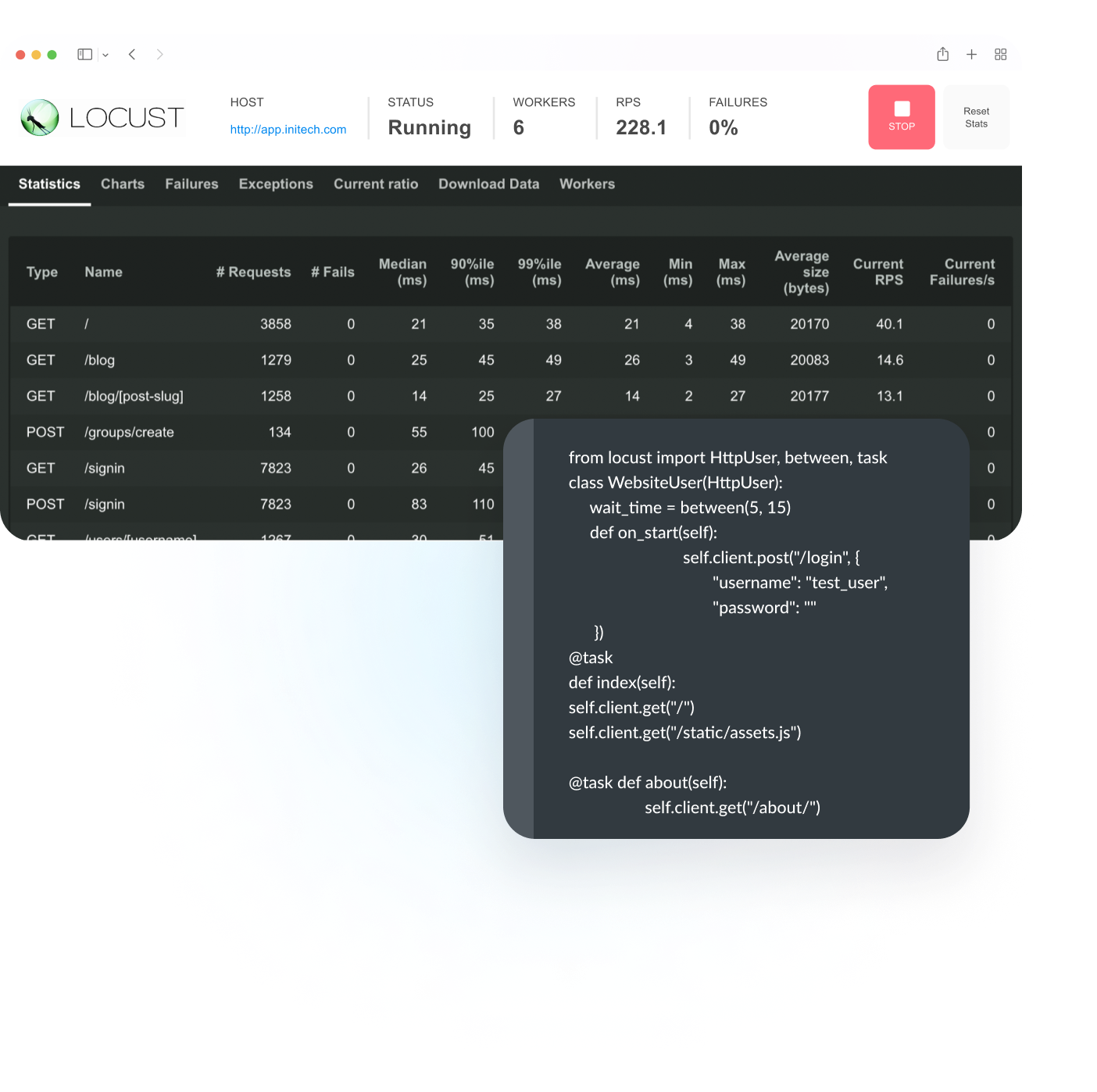

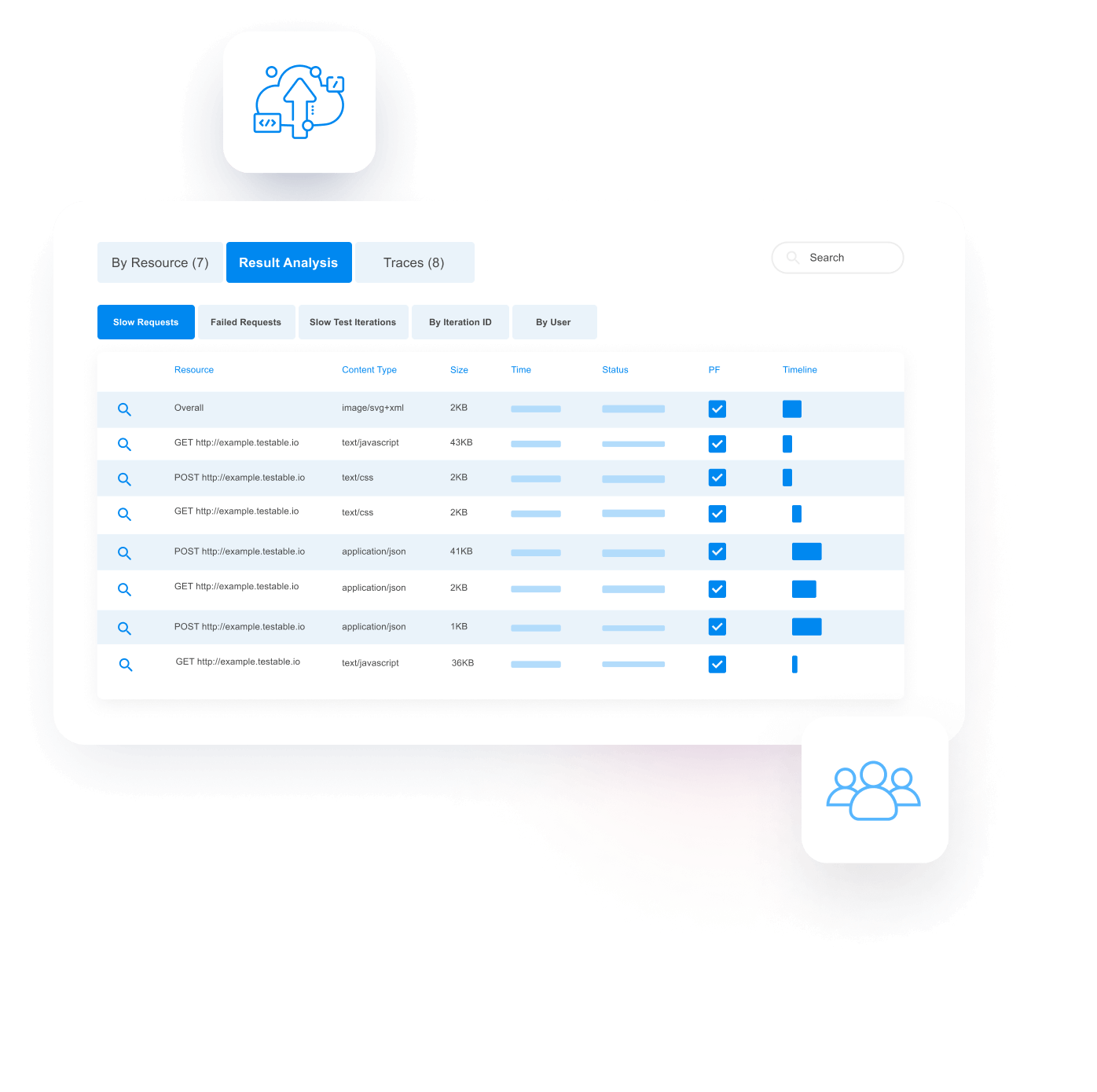

Traces

A full trace includes all metrics and a timeline view of the request, including all request and response headers and bodies. Testable samples traces intelligently: it ensures you have at least one example for every combination of URL and response code, without capturing so many traces that test performance suffers. These traces are very useful for analyzing and debugging application errors.
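The page does not describe how Testable implements this sampling. The sketch below shows one hypothetical coverage-first policy that meets both stated goals. The first trace for each (URL, response code) pair is always kept. Extra traces are kept only while a per-pair limit and a global budget both allow it. `TraceSampler`, `max_per_key`, and `extra_budget` are illustrative names, not part of any Testable API.

```python
from collections import defaultdict


class TraceSampler:
    """Hypothetical coverage-first trace sampler (not Testable's actual code).

    - The first trace for every (URL, response code) pair is always kept,
      so each combination has at least one example.
    - Additional traces are kept only while the per-pair limit and the
      global extra budget both have room, which bounds capture overhead.
    """

    def __init__(self, max_per_key: int = 3, extra_budget: int = 100) -> None:
        self.max_per_key = max_per_key    # assumed cap on traces per (URL, status)
        self.extra_budget = extra_budget  # assumed global budget for non-first traces
        self._counts = defaultdict(int)   # (url, status) -> number of traces kept

    def should_capture(self, url: str, status: int) -> bool:
        key = (url, status)
        count = self._counts[key]
        if count == 0:
            # First time this combination appears: always capture it.
            self._counts[key] = 1
            return True
        if count < self.max_per_key and self.extra_budget > 0:
            # Extra example: allowed only while both limits have room.
            self._counts[key] = count + 1
            self.extra_budget -= 1
            return True
        return False


sampler = TraceSampler(max_per_key=2, extra_budget=1)
print(sampler.should_capture("/login", 200))  # True: first /login 200
print(sampler.should_capture("/login", 200))  # True: uses the single extra slot
print(sampler.should_capture("/login", 200))  # False: per-pair limit reached
print(sampler.should_capture("/cart", 500))   # True: first /cart 500 is always kept
print(sampler.should_capture("/cart", 500))   # False: global extra budget exhausted
```

This version is deterministic to keep it easy to follow. A real sampler might instead pick extra traces randomly or by time window. The coverage guarantee comes from always keeping the first example of each pair.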